Introduction

What Is an Impact Evaluation?

Outcome vs. Impact - What’s the Difference?

Types of Impact Evaluation

When to Conduct an Impact Evaluation

Who to Engage in the Evaluation Process

What Are Key Evaluation Questions (KEQs) in Impact Evaluation?

Why Is an Impact Evaluation Important?

Challenges and Limitations of an Impact Evaluation

How to Plan and Manage an Impact Evaluation

What Are the Different Evaluation Methodologies?

Deciding on the Best Evaluation Methodology

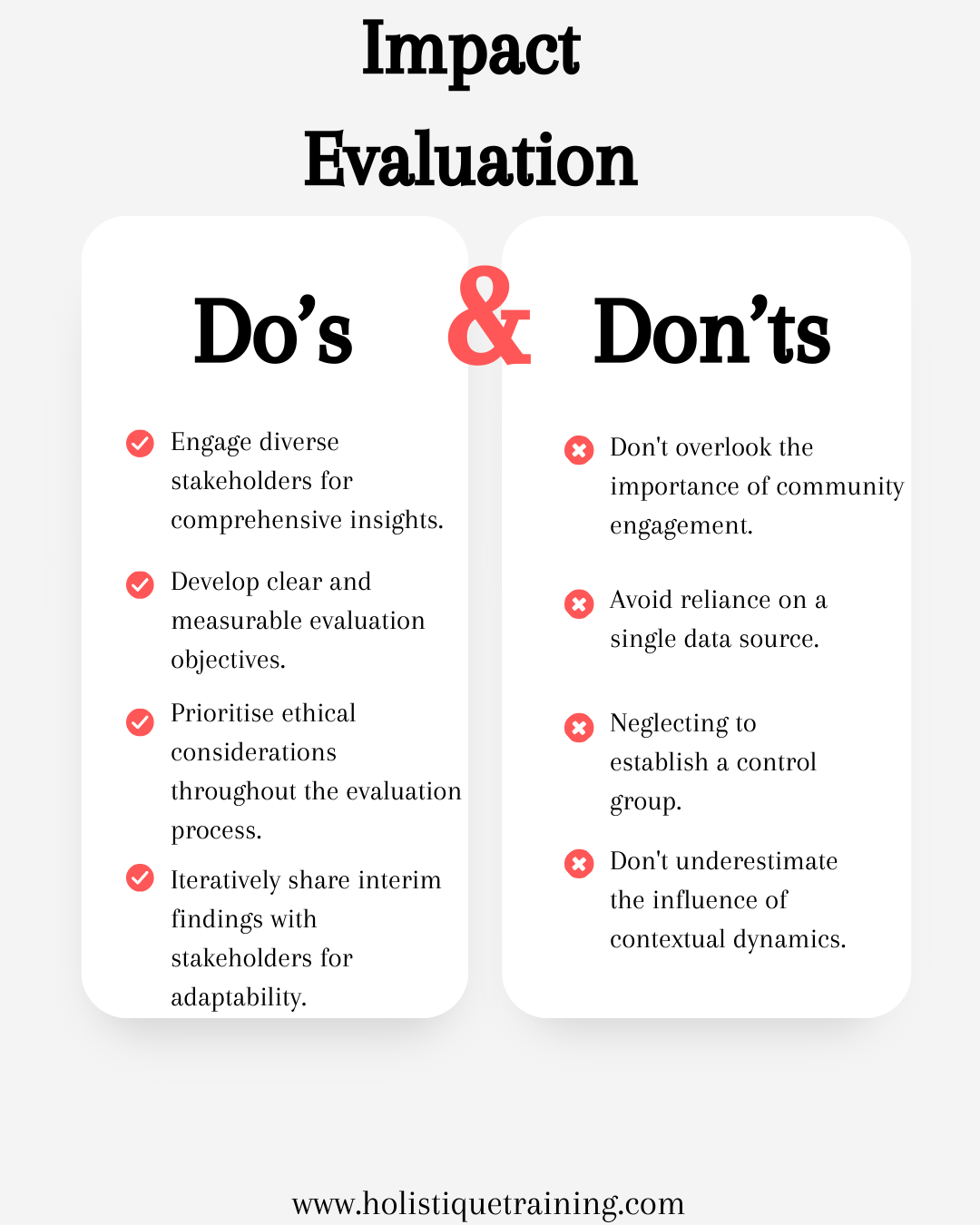

Best Practices for Conducting an Impact Evaluation

Example of Impact Evaluation in Practice

Conclusion

Introduction

As the world grapples with complex challenges, the need to assess whether interventions and programs actually work has become paramount. Enter impact evaluation: a methodical process for scrutinising the outcomes and impacts of initiatives. This article explores its purpose, methodologies and challenges, and the role it plays in informing better decisions about future programs and policies.

What Is an Impact Evaluation?

At its core, an impact evaluation is a systematic and rigorous assessment that seeks to determine the causal effects of a particular intervention, program, or policy. It does this by comparing what actually happened with an estimate of what would have happened without the intervention, known as the counterfactual. The overarching purpose is to discern not only what changes occurred but also why and how they happened. In essence, impact evaluations aim to establish whether, and to what extent, initiatives achieved their intended outcomes and broader impacts.

Impact evaluations serve several goals. They provide evidence-based insights and a nuanced understanding of cause-and-effect dynamics within a given context. Knowing an intervention's impact helps decision-makers make informed choices, allocate resources more effectively, and refine strategies to improve future outcomes.

Outcome vs. Impact - What’s the Difference?

Before delving further, it's crucial to distinguish between outcomes and impacts. Outcomes refer to the immediate and observable changes resulting from an intervention. These changes can be quantitative or qualitative and are often the stepping stones toward achieving broader impacts.

Impacts, on the other hand, are the deeper and longer-lasting changes that stem from the cumulative effect of outcomes. If outcomes are the trees, impacts are the forest: the overarching transformation that reflects the ultimate success or failure of an initiative.

Types of Impact Evaluation

Impact evaluations come in various shapes and sizes, tailored to the unique characteristics of the intervention and the complexity of the context. Three common types include:

1- Randomised Controlled Trials (RCTs)

Randomised Controlled Trials (RCTs) are widely regarded as the gold standard in impact evaluation. The essence of an RCT is the random assignment of participants to either a treatment group, which receives the intervention, or a control group, which does not. Because assignment is random, the two groups are, on average, comparable in both observed and unobserved characteristics. Systematic differences in their outcomes (beyond what chance alone would produce) can therefore be attributed to the intervention, establishing a robust causal link.

The strength of RCTs lies in their ability to provide internally valid results, offering a high level of confidence in attributing observed changes to the intervention itself. However, their application can be challenging in certain contexts, especially when ethical considerations or practical constraints limit the feasibility of randomisation.
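The core logic of an RCT can be sketched in a few lines of Python. The sketch shuffles participants into two groups, then estimates the average effect as the difference in mean outcomes, together with its standard error. The participant IDs, the outcome values and the assumed true effect of +5 are all simulated for illustration. A real trial would also involve pre-registration, balance checks and handling of attrition.

```python
import random
import statistics


def randomise(participants, seed=42):
    """Randomly split participants into treatment and control groups of (near) equal size."""
    rng = random.Random(seed)  # fixed seed so the assignment can be reproduced and audited
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def difference_in_means(treated_outcomes, control_outcomes):
    """Estimated average treatment effect and its standard error (unequal variances)."""
    effect = statistics.mean(treated_outcomes) - statistics.mean(control_outcomes)
    se = (statistics.variance(treated_outcomes) / len(treated_outcomes)
          + statistics.variance(control_outcomes) / len(control_outcomes)) ** 0.5
    return effect, se


# Simulated data: hypothetical participants, outcomes drawn around 50,
# with an assumed true effect of +5 for those who receive the intervention.
participants = list(range(200))
treatment, control = randomise(participants)
noise = random.Random(7)
treated_outcomes = [noise.gauss(50, 10) + 5 for _ in treatment]
control_outcomes = [noise.gauss(50, 10) for _ in control]

effect, se = difference_in_means(treated_outcomes, control_outcomes)
print(f"Estimated effect: {effect:.2f} (SE {se:.2f})")
```

Assignment depends only on the random number generator, so any baseline difference between the groups is due to chance. The standard error quantifies that chance.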

2- Quasi-Experimental Designs

Quasi-experimental designs serve as a bridge between the stringent control of RCTs and the real-world complexities of interventions. In situations where randomisation is impractical or ethically challenging, quasi-experimental designs offer a pragmatic alternative. These designs use statistical techniques to construct a comparison group that is as similar as possible to the treatment group.

Quasi-experimental designs accept the constraints of real-world settings but still strive to establish a credible comparison between intervention and non-intervention groups. Common designs include matched-pair designs, difference-in-differences analysis, and regression discontinuity designs. Each relies on assumptions that randomisation would otherwise guarantee. Difference-in-differences, for instance, assumes that both groups would have followed parallel trends in the absence of the intervention. While these designs may not achieve the same level of internal validity as RCTs, they provide a more feasible and ethical approach in many real-world scenarios.
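To illustrate one of these designs, the sketch below computes a basic two-group, two-period difference-in-differences estimate from group means. It takes the change in the treatment group and subtracts the change in the comparison group. The income figures are hypothetical, and the estimate is only credible if the parallel-trends assumption holds.

```python
from statistics import mean


def did_estimate(treated_pre, treated_post, comparison_pre, comparison_post):
    """2x2 difference-in-differences: change in treated minus change in comparison group."""
    treated_change = mean(treated_post) - mean(treated_pre)
    comparison_change = mean(comparison_post) - mean(comparison_pre)
    # The comparison group's change stands in for what would have happened to the
    # treated group without the intervention (the parallel-trends assumption).
    return treated_change - comparison_change


# Hypothetical average monthly incomes before and after a program
treated_pre = [40, 42, 38]        # mean 40
treated_post = [54, 57, 54]       # mean 55
comparison_pre = [41, 43, 42]     # mean 42
comparison_post = [47, 49, 48]    # mean 48

effect = did_estimate(treated_pre, treated_post, comparison_pre, comparison_post)
print(effect)  # 9: a 15-point rise for the treated group minus a 6-point rise for the comparison group
```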

3- Mixed-Methods Evaluations

Recognising the multifaceted nature of interventions, mixed-methods evaluations weave together quantitative and qualitative data. The aim is to capture the nuances that quantitative data alone may overlook.

Quantitative methods offer statistical rigour, measuring changes in numerical terms, while qualitative methods delve into the lived experiences, perceptions, and context surrounding the intervention. Interviews, focus group discussions, and case studies are common qualitative tools employed in mixed-methods evaluations. This approach not only provides a more holistic understanding but also enhances the validity and reliability of the evaluation by triangulating findings from multiple sources.

In short, there is no one-size-fits-all choice among these types of impact evaluation. The right approach depends on the intervention's characteristics, the available resources, and the specific research questions at hand. RCTs set the bar for internal validity. Quasi-experimental designs and mixed-methods evaluations offer practical and nuanced alternatives, which keeps impact evaluation adaptable to a wide range of interventions and contexts.

When to Conduct an Impact Evaluation

Timing is everything in impact evaluation. While it might be tempting to evaluate every initiative, practical considerations often dictate the decision. Impact evaluations are particularly valuable when:

A- The intervention aims for significant and lasting change.

B- The intervention is resource-intensive.

C- There is a need to understand the causal relationship between the intervention and observed changes.

D- The intervention's success is crucial for informing future decisions or policies.

Who to Engage in the Evaluation Process

Collaboration is a cornerstone of impactful evaluations. Engaging a diverse group of stakeholders ensures a more comprehensive and nuanced understanding of the intervention's effects. Key players in the evaluation process may include:

1- Program implementers

Program implementers, often the architects of the intervention, play a pivotal role in impact evaluation. Their intimate knowledge of the intervention's design, implementation, and challenges provides essential insights into the intricacies of the initiative. Engaging program implementers from the outset ensures that the evaluation is grounded in the operational realities of the intervention.

Through their involvement, program implementers contribute knowledge of the local context and the nuances that may influence the intervention's impact. Their collaboration is essential not only for understanding the intervention's theory of change but also for uncovering implementation bottlenecks that may affect the observed outcomes.

2- Beneficiaries

Beneficiaries, the individuals directly impacted by the intervention, offer a ground-level perspective that is invaluable in understanding real-world outcomes. Their experiences, challenges, and aspirations provide a nuanced understanding of how the intervention translates into tangible changes in their lives. Engaging beneficiaries in the evaluation process ensures that the assessment aligns with the intended goals and reflects the lived experiences of those the intervention seeks to benefit.

Beneficiaries' input can illuminate unforeseen consequences, both positive and negative, and highlight areas where the intervention may need adjustments. Through surveys, focus group discussions, or interviews, their voices contribute to a comprehensive narrative that transcends statistical indicators, adding depth and authenticity to the evaluation findings.

3- Experts

Subject matter experts contribute a deep understanding of the field, grounding the evaluation in relevant theories and best practices. Their expertise helps ensure that the evaluation aligns with established standards and draws on current research in the field.

Experts can assist in crafting Key Evaluation Questions (KEQs) that are theoretically robust and methodologically sound. Their involvement also enhances the credibility of the evaluation process, providing external validation and ensuring that the assessment is rigorous and aligned with the broader body of knowledge in the field.

4- External evaluators

External evaluators, independent of the intervention's design and implementation, bring an impartial perspective to the evaluation process. Because they are not closely associated with the intervention, they help mitigate the biases that insiders may bring, which strengthens the evaluation's credibility.

By offering an outsider's viewpoint, external evaluators enhance the reliability of the evaluation findings. Their independence fosters a sense of transparency and accountability, assuring stakeholders that the evaluation is conducted with rigour and integrity.

Harmonising the Ensemble: Best Practices in Stakeholder Engagement

To orchestrate a symphony of perspectives effectively, adhering to best practices in stakeholder engagement is essential:

1- Early Involvement

Engage stakeholders from the early stages of evaluation planning to ensure a shared understanding of goals and expectations.

2- Collaborative Decision-Making

Foster a collaborative environment where stakeholders actively contribute to key decisions, such as the formulation of KEQs and the selection of evaluation methodologies.

3- Effective Communication

Maintain open and transparent communication channels to keep stakeholders informed about the evaluation's progress, interim findings, and potential adjustments.

4- Diversity and Inclusion

Ensure that the diversity of stakeholders reflects the broader community or context of the intervention. Embrace inclusivity to capture a wide range of perspectives.

5- Feedback Mechanisms

Establish mechanisms for continuous feedback, allowing stakeholders to provide input throughout the evaluation process. This iterative approach enhances the relevance and validity of the evaluation.

In essence, engaging diverse stakeholders turns the evaluation from a purely technical exercise into a collaborative one. Each stakeholder contributes to a richer understanding of the intervention's impact and of the complexities of real-world settings. Bringing these perspectives together enhances the credibility of the evaluation. It also fosters a sense of shared ownership, helping ensure that the findings are relevant, meaningful, and actionable for all involved.

What Are Key Evaluation Questions (KEQs) in Impact Evaluation?

Crafting precise and relevant Key Evaluation Questions (KEQs) is a crucial step in the impact evaluation process. KEQs serve as guideposts, directing the evaluation towards the most pertinent aspects of the intervention. Examples of KEQs might include:

- What are the short-term and long-term outcomes of the intervention?

- How did contextual factors influence the outcomes?

- To what extent did the intervention reach its target population?

- What unintended consequences, positive or negative, emerged from the intervention?

Why Is an Impact Evaluation Important?

Impact evaluation serves several purposes. It contributes to:

Informed Decision-Making

At its core, impact evaluation serves as a compass for decision-makers navigating the complex terrain of interventions. By providing evidence-based insights, impact evaluations empower decision-makers with a roadmap to discern what works, what doesn't, and why. This informed decision-making is particularly crucial in the allocation of resources, where choices must be optimised to maximise impact.

Whether policymakers are shaping new initiatives or refining existing ones, the findings from impact evaluations guide their choices, ensuring that limited resources are directed toward interventions that yield the most significant and sustainable outcomes. It transforms decision-making from a speculative venture into a strategic process grounded in empirical evidence.

Continuous Improvement

Impact evaluations serve as catalysts for continuous improvement, fostering a culture where interventions evolve, adapt, and refine themselves over time. As findings emerge, stakeholders can identify areas for enhancement, adjusting strategies and approaches based on real-world outcomes. This iterative process ensures that interventions remain dynamic and responsive to the ever-changing needs of the communities they serve.

The insights gleaned from impact evaluations become invaluable feedback loops, guiding program implementers, policymakers, and other stakeholders in refining their interventions. This commitment to continuous improvement distinguishes impactful initiatives from stagnant ones, nurturing a culture where learning from both successes and failures propels interventions toward greater efficacy.

Accountability and Transparency

In an era where accountability is paramount, impact evaluations demonstrate an organisation's commitment to transparency and results. Stakeholders, including funders, policymakers, and the public, increasingly expect evidence of an intervention's impact. A well-designed impact evaluation provides a systematic and transparent account of whether an intervention has achieved its intended outcomes.

By subjecting interventions to rigorous evaluation, organisations demonstrate accountability for the resources entrusted to them. Transparency in sharing evaluation findings builds trust among stakeholders, fostering confidence in the integrity of the intervention process. It transforms accountability from a bureaucratic necessity into a cornerstone of ethical and responsible practice.

Learning and Knowledge Generation

Impact evaluations contribute not only to the understanding of a specific intervention but also to the broader knowledge base. The lessons learned from one evaluation can inform similar interventions in diverse contexts, adding to the collective wisdom of effective strategies. This broader impact extends beyond the immediate stakeholders, benefiting practitioners, researchers, and policymakers globally.

Through the dissemination of evaluation results, the learning derived from successful interventions and the identification of pitfalls enrich the field, guiding future endeavours toward more informed and impactful practices. Impact evaluations thus become a crucial source of knowledge generation, cultivating an environment where evidence-based practices become the cornerstone of effective interventions.

Challenges and Limitations of an Impact Evaluation

While impact evaluation is a powerful tool, it is not without its challenges. Common hurdles include:

a) Attribution and Causality

One of the fundamental challenges in impact evaluation is attributing observed changes directly to the intervention itself. The real-world context is rife with confounding variables—external factors that may influence outcomes independently of the intervention. Separating the signal from the noise and establishing a clear causal link can be akin to navigating a labyrinth, especially in the absence of a controlled experimental setting.

Evaluators grapple with questions such as: Did the observed change truly result from the intervention, or were other factors at play? Addressing attribution challenges often requires sophisticated statistical methods, robust research designs, and a deep understanding of the contextual intricacies that shape outcomes.

b) Contextual Dynamics

The effectiveness of an intervention can be highly context-dependent, adding a layer of complexity to impact evaluations. What works in one setting may not necessarily translate seamlessly to another. Contextual dynamics encompass cultural, economic, social, and political factors that influence how an intervention unfolds and its impact on the target population.

Navigating these contextual intricacies requires evaluators to be keenly aware of the unique features of the environment in which the intervention operates. This may involve conducting context analyses, engaging with local stakeholders, and adapting evaluation methodologies to suit the specific nuances of the setting.

c) Resource Intensity

Rigorous impact evaluations demand considerable time and money. Randomised controlled trials (RCTs), often described as the gold standard, typically require substantial investment in randomisation, data collection, and analysis. In real-world scenarios, resource constraints may force compromises in the level of rigour.

Finding the balance between methodological rigour and practical feasibility is an ongoing challenge. Evaluators must make strategic decisions about the most efficient use of resources while protecting the validity and reliability of the evaluation findings.
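Sample size is one major reason rigorous designs are costly. A common rough guide is the normal-approximation formula for comparing two means with equal group sizes: n per group = 2 × (z_alpha + z_power)² × σ² / δ². Here δ is the smallest effect worth detecting, σ is the outcome's standard deviation, and z_alpha and z_power are standard normal quantiles. The Python sketch below implements this formula with the standard library. The example values are hypothetical. Real designs with clustered samples, attrition or unequal groups need adjustments that this sketch omits.

```python
import math
from statistics import NormalDist


def sample_size_per_group(min_effect, sd, alpha=0.05, power=0.80):
    """Approximate participants needed per arm to detect `min_effect` in a two-arm trial.

    Normal approximation for a two-sided test comparing two means with equal group sizes.
    """
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)   # about 1.96 for alpha = 0.05
    z_power = z.inv_cdf(power)           # about 0.84 for 80% power
    n = 2 * (z_alpha + z_power) ** 2 * sd ** 2 / min_effect ** 2
    return math.ceil(n)  # round up: participants come in whole numbers


# Hypothetical outcome with a standard deviation of 10 points
print(sample_size_per_group(min_effect=5, sd=10))    # 63 per group
print(sample_size_per_group(min_effect=2.5, sd=10))  # 252 per group
```

Halving the minimum detectable effect roughly quadruples the required sample. This is why ambitious precision targets translate quickly into larger budgets.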

d) Ethical Considerations

Ethical considerations loom large in impact evaluations, particularly when experimental designs involve control groups that do not receive the intervention. Balancing the need for rigorous evaluation with ethical responsibilities to participants raises complex dilemmas. The potential harm to control groups, the equitable distribution of benefits, and the confidentiality of sensitive information are among the ethical considerations evaluators must navigate.

Careful attention to ethical guidelines, obtaining informed consent, and ensuring the well-being and rights of participants are integral components of responsible impact evaluation. Ethical challenges demand a thoughtful and transparent approach to safeguard the rights and dignity of all involved.

How to Transform Challenges into Opportunities

While challenges persist, they also present opportunities for refinement and improvement in the impact evaluation process:

Mixed-Methods Approaches

Integrating both quantitative and qualitative methods can help triangulate findings, providing a more comprehensive understanding of the intervention's impact. Qualitative data can offer insights into the contextual factors influencing outcomes and help interpret quantitative results.

Longitudinal Analysis

Conducting longitudinal analyses allows evaluators to track changes over time, offering a nuanced understanding of the intervention's sustained impact. This helps address the challenge of capturing complex, long-term outcomes.

Sensitivity Analyses

Evaluators can employ sensitivity analyses to assess the robustness of their findings to variations in assumptions or methodological choices. This enhances the credibility of the evaluation by demonstrating the consistency of results across different scenarios.
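A simple form of sensitivity analysis is to re-run the same estimate under different, defensible analytic choices and check whether the conclusion holds. The sketch below uses made-up outcome data. It recomputes a difference in means after trimming 0, 1 or 2 extreme values from each end of each group. The trimming rule is just one illustrative assumption to vary.

```python
from statistics import mean


def effect(treated, control):
    """Difference in mean outcomes between groups."""
    return mean(treated) - mean(control)


def trim(values, k):
    """Drop the k smallest and k largest values (a simple, illustrative outlier rule)."""
    ordered = sorted(values)
    return ordered[k:len(ordered) - k]


def sensitivity(treated, control, trims=(0, 1, 2)):
    """Re-estimate the effect under each trimming choice."""
    return {k: effect(trim(treated, k), trim(control, k)) for k in trims}


# Hypothetical outcomes; one treated participant (40) is an extreme value
treated = [12, 14, 15, 13, 40, 14, 16]
control = [10, 11, 9, 12, 10, 11, 10]

for k, estimate in sensitivity(treated, control).items():
    print(f"trim {k}: effect = {estimate:.2f}")
```

In this example, a single extreme value drives the full-sample estimate up, while the trimmed estimates agree with each other. A result that shifts this much would warrant investigating that observation rather than reporting a single number.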

Community Engagement

Involving the community in the evaluation process helps address contextual dynamics and ensures that the evaluation is culturally sensitive. Community engagement fosters a sense of ownership and provides valuable insights that may otherwise be overlooked.

In navigating the challenges and limitations of impact evaluations, the key lies in recognising them not as roadblocks but as opportunities for improvement. By embracing a dynamic and iterative approach, evaluators can refine methodologies, enhance ethical practices, and contribute to the ongoing evolution of impact evaluation as a robust and adaptive tool for understanding the effects of interventions on the complex tapestry of human systems.

How to Plan and Manage an Impact Evaluation

The success of an impact evaluation hinges on meticulous planning and adept management. Key steps in the process include:

1- Define the Evaluation Scope and Objectives

At the outset, clarity on the goals, scope, and objectives of the evaluation is paramount. Stakeholders must collaboratively define what success looks like and what aspects of the intervention will be assessed. This involves a thorough understanding of the intervention's theory of change, the intended outcomes, and the overarching purpose of the evaluation.

Defining the scope also entails specifying the target population, the duration of the evaluation, and the key indicators that will be measured. This foundational step sets the stage for the entire evaluation process, providing a roadmap for subsequent decisions and actions.

2- Select Appropriate Evaluation Methods

Choosing the most suitable evaluation design and methodologies is a critical decision that hinges on the nature of the intervention and available resources. Consideration should be given to whether a randomised controlled trial (RCT), quasi-experimental design, or mixed-methods approach is most appropriate.

The selection process involves weighing the trade-offs between internal validity, external validity, and practical feasibility. The goal is to choose methods that provide credible evidence while aligning with the ethical and logistical constraints of the evaluation context.

3- Develop a Monitoring and Evaluation (M&E) Framework

A robust Monitoring and Evaluation (M&E) framework serves as the scaffolding for the evaluation process. It outlines the methods for data collection, identifies relevant indicators, and establishes timelines for different evaluation activities. The M&E framework also delineates the roles and responsibilities of various stakeholders involved in the evaluation.

This step involves creating a detailed plan for data collection tools, sampling methods, and data analysis procedures. It is crucial to ensure that the chosen methods align with the research questions and provide a comprehensive understanding of the intervention's impact.
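Part of an M&E framework can be captured as structured data. This keeps indicators, baselines, targets, data sources, timing and responsibilities in one place. The Python sketch below shows one hypothetical way to do this. The field names and the example indicator are illustrative assumptions, not a standard schema.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    name: str
    level: str          # e.g. "output", "outcome" or "impact"
    baseline: float
    target: float
    data_source: str
    frequency: str      # how often the indicator is collected
    responsible: str    # who collects and reports it

    def progress(self, current: float) -> float:
        """Share of the baseline-to-target distance achieved (1.0 means target met)."""
        span = self.target - self.baseline
        if span == 0:
            raise ValueError("target must differ from baseline")
        return (current - self.baseline) / span


# Hypothetical outcome indicator for an education program
completion = Indicator(
    name="Primary school completion rate (%)",
    level="outcome",
    baseline=60.0,
    target=80.0,
    data_source="School enrolment records",
    frequency="Annual",
    responsible="District education office",
)
print(completion.progress(70.0))  # 0.5: halfway from baseline to target
```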

4- Engage Stakeholders

Stakeholder engagement is not a mere formality but a cornerstone of successful impact evaluations. Involving key stakeholders, including program implementers, beneficiaries, experts, and external evaluators, fosters a collaborative environment. Stakeholders should be engaged from the inception of the evaluation, providing input into the design, implementation, and interpretation of results.

Effective communication and collaboration are essential to align the expectations of different stakeholders and ensure that diverse perspectives contribute to the evaluation's richness. Regular meetings, feedback sessions, and transparent communication channels facilitate this collaborative approach.

5- Collect and Analyse Data

The execution phase involves implementing the data collection plan outlined in the M&E framework. Whether through surveys, interviews, focus group discussions, or a combination of methods, data collection should be systematic and aligned with the evaluation's objectives.

Once data is collected, the analysis phase begins. Quantitative data may undergo statistical analysis, while qualitative data requires coding and thematic analysis. Triangulating findings from multiple sources enhances the reliability and validity of the evaluation results.
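Coding qualitative data is interpretive work done by people. Once excerpts are coded, however, simple tallies help summarise them. The sketch below uses hypothetical respondent IDs and theme codes. It counts how often each theme is mentioned and how many distinct respondents raised it, because one talkative respondent could otherwise inflate a theme.

```python
from collections import Counter

# Hypothetical coded interview excerpts: (respondent_id, theme) pairs
coded_excerpts = [
    ("R1", "cost barrier"), ("R1", "improved income"),
    ("R2", "improved income"), ("R3", "cost barrier"),
    ("R3", "cost barrier"), ("R4", "travel distance"),
]


def theme_summary(excerpts):
    """Return {theme: (number of mentions, number of distinct respondents)}."""
    mentions = Counter(theme for _, theme in excerpts)
    respondents = Counter(theme for _, theme in set(excerpts))  # one count per respondent-theme pair
    return {theme: (mentions[theme], respondents[theme]) for theme in mentions}


for theme, (n_mentions, n_people) in theme_summary(coded_excerpts).items():
    print(f"{theme}: {n_mentions} mentions from {n_people} respondents")
```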

6- Iterative Feedback

Rather than waiting until the end of the evaluation, evaluators should share interim findings with stakeholders as the work progresses. This allows for adjustments and refinements based on emerging insights. Regular check-ins keep the evaluation responsive to contextual changes and provide timely information for decision-making.

Feedback sessions also offer an opportunity to engage stakeholders in the interpretation of results, fostering a sense of shared ownership and understanding of the evaluation outcomes.

7- Adaptation Strategies

While the outlined steps provide a structured approach, adaptability is key. Impact evaluations often unfold in dynamic and unpredictable environments. To navigate uncertainties, evaluators may need to adapt their strategies, modify data collection approaches, or adjust timelines.

Additionally, incorporating a learning mindset encourages evaluators to reflect on the process continually. What worked well? What challenges were encountered? These reflections contribute to the evolution of evaluation methodologies and best practices over time.

Table 1: Metrics that need to be measured in an impact evaluation

| Metrics | Description |
|---|---|
| Short-term outcomes | Immediate changes resulting from intervention. |
| Long-term impacts | Profound, lasting transformations reflecting intervention's ultimate success. |
| Contextual influences | Understanding how external factors shape outcomes. |
| Unintended consequences | Identifying positive or negative outcomes not foreseen. |
| Economic efficiency | Evaluating the cost-benefit and cost-effectiveness of interventions. |

What Are the Different Evaluation Methodologies?

Selecting the appropriate evaluation methodology is a critical decision. Common methodologies include:

1- Quantitative Methods

Quantitative methods rely on numerical data to measure and analyse the impact of interventions. Surveys, experiments, and statistical techniques are the instruments of choice in this methodological approach. The goal is to quantify changes, establish patterns, and draw statistical inferences about the intervention's effects.

Surveys

Structured questionnaires allow for the collection of standardised data from a sample population. Surveys are efficient for measuring changes in variables such as knowledge, attitudes, or behaviours.

Experiments

Often associated with randomised controlled trials (RCTs), experiments involve the random assignment of participants to treatment and control groups. This randomisation enables evaluators to attribute observed changes directly to the intervention.

Regression Analysis

Statistical models, such as regression analysis, help identify relationships between variables. Regression can estimate the association between the intervention and observed outcomes while controlling for measured confounding factors. On its own, however, it cannot rule out bias from unmeasured confounders, so any causal interpretation depends on the research design.
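The sketch below illustrates regression adjustment. It regresses an outcome on a treatment indicator and a baseline covariate using ordinary least squares. It is written in plain Python to stay self-contained; in practice a statistics package would be used. The data are hypothetical and deliberately noise-free, so the coefficients are easy to check. The treated group starts with lower baseline scores, so the naive difference in means understates the effect, while the adjusted coefficient recovers it.

```python
from statistics import mean


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [bi] for row, bi in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def ols(rows, y):
    """Ordinary least squares coefficients via the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    xtx = [[sum(row[i] * row[j] for row in rows) for j in range(k)] for i in range(k)]
    xty = [sum(row[i] * yi for row, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


# Hypothetical data: treatment indicator, baseline score, follow-up outcome
treated = [1, 1, 1, 0, 0, 0]
baseline = [10, 12, 14, 11, 13, 15]
outcome = [10.0, 11.0, 12.0, 7.5, 8.5, 9.5]  # generated as 2 + 3*treated + 0.5*baseline

naive = (mean(o for o, t in zip(outcome, treated) if t)
         - mean(o for o, t in zip(outcome, treated) if not t))
intercept, adjusted, slope = ols([[1, t, x] for t, x in zip(treated, baseline)], outcome)
print(f"naive difference: {naive:.2f}, adjusted effect: {adjusted:.2f}")
```

Regression adjustment only corrects for the covariates included in the model. It cannot account for unobserved differences between groups, which is why the design (randomisation or a credible quasi-experiment) still matters.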

Quantitative methods provide a high level of precision in measuring and quantifying impact, making them particularly suitable for interventions where numerical data can capture changes effectively.

2- Qualitative Methods

Qualitative methods complement quantitative approaches by providing depth and context to the understanding of impact. These methods focus on non-numerical data, offering a nuanced exploration of the experiences, perceptions, and meanings associated with interventions.

Interviews

In-depth interviews allow evaluators to delve into individual perspectives, gathering rich qualitative data. Structured, semi-structured, or unstructured interviews can be employed based on the research questions.

Focus Group Discussions

Group interactions in focus group discussions provide a collective understanding of the intervention's impact. Participants' shared experiences contribute to a deeper comprehension of the contextual dynamics at play.

Case Studies

In-depth examinations of specific cases offer a detailed exploration of the intervention's effects within a particular context. Case studies are valuable for uncovering the complexities and unique aspects of impact.

Qualitative methods are particularly effective in capturing the complexities of human experiences, contextual factors influencing outcomes, and unintended consequences of interventions.

3- Econometric Methods

Econometric methods bring an economic lens to impact evaluation, assessing the economic effects of interventions. They apply economic theory and statistical models to estimate effects on economic outcomes such as income, employment, or productivity. These estimates often feed into economic evaluation tools that weigh an intervention's effects against its costs, such as the two below.

Cost-Benefit Analysis (CBA)

CBA compares the costs and benefits of an intervention to assess its efficiency. It expresses both in monetary terms, which means that non-market impacts must also be assigned a monetary value. Decision-makers can then judge whether the benefits justify the costs.
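A minimal CBA calculation discounts yearly benefits and costs to present values. It then reports the net present value (NPV, benefits minus costs) and the benefit-cost ratio (BCR). The cash flows and the 5% discount rate below are hypothetical. The choice of discount rate is itself an assumption that is often varied in a sensitivity analysis.

```python
def present_value(flows, rate):
    """Discount yearly amounts (year 0 first) back to today's value."""
    return sum(amount / (1 + rate) ** year for year, amount in enumerate(flows))


def cost_benefit(benefits, costs, rate):
    """Return (net present value, benefit-cost ratio) for yearly benefit and cost streams."""
    pv_benefits = present_value(benefits, rate)
    pv_costs = present_value(costs, rate)
    return pv_benefits - pv_costs, pv_benefits / pv_costs


# Hypothetical program: set-up cost in year 0, running costs and benefits in years 1 and 2
benefits = [0, 80, 80]
costs = [100, 20, 20]
npv, bcr = cost_benefit(benefits, costs, rate=0.05)
print(f"NPV = {npv:.2f}, BCR = {bcr:.2f}")
```

A positive NPV (equivalently, a BCR above 1) indicates that discounted benefits exceed discounted costs under these assumptions.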

Cost-Effectiveness Analysis (CEA)

CEA compares the costs of alternative interventions that aim at the same outcome. Costs are expressed per unit of that outcome (for example, cost per child vaccinated) rather than in monetary terms. This method is particularly useful when resources are limited and decision-makers seek to maximise impact within budget constraints.
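The core CEA arithmetic is a cost-effectiveness ratio, which is the cost per unit of outcome. When comparing two options, analysts also use the incremental cost-effectiveness ratio (ICER): the extra cost per extra unit of outcome. The two vaccination programs below are hypothetical.

```python
def cost_effectiveness_ratio(cost, outcome_units):
    """Average cost per unit of outcome achieved."""
    return cost / outcome_units


def icer(cost_new, effect_new, cost_old, effect_old):
    """Incremental cost per additional unit of outcome when moving from the old to the new option."""
    extra_effect = effect_new - effect_old
    if extra_effect == 0:
        raise ValueError("options achieve the same outcome; compare costs directly")
    return (cost_new - cost_old) / extra_effect


# Hypothetical programs: total cost and number of children vaccinated
program_a = {"cost": 50_000, "vaccinated": 1_000}
program_b = {"cost": 80_000, "vaccinated": 1_400}

print(cost_effectiveness_ratio(program_a["cost"], program_a["vaccinated"]))  # 50.0 per child
print(icer(program_b["cost"], program_b["vaccinated"],
           program_a["cost"], program_a["vaccinated"]))                    # 75.0 per extra child
```

Program B costs more per child on average (about 57), and each additional child it reaches costs 75. Whether that is worthwhile depends on the budget and on what decision-makers are willing to pay per additional outcome.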

Econometric methods contribute to a holistic understanding of interventions by shedding light on their economic implications, helping decision-makers assess efficiency and prioritise resource allocation.

4- Mixed-Methods Evaluations

Recognising the strengths of both quantitative and qualitative approaches, mixed-methods evaluations weave together the numerical precision of quantitative data with the contextual richness of qualitative insights.

Triangulation

Triangulation involves comparing findings from different data sources or methods to validate and strengthen the overall understanding of impact. Convergence of evidence from both quantitative and qualitative strands enhances the reliability of the evaluation.
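Triangulation is a matter of judgement, but the bookkeeping can be made explicit. For each hypothetical finding, the sketch below records whether each source supports it (True), contradicts it (False) or says nothing (None). It then labels the overall pattern. The findings, sources and labels are illustrative assumptions. A divergent result is a prompt for further inquiry, not an error.

```python
def triangulate(evidence):
    """Label each finding by how its sources line up.

    evidence: {finding: {source: True (supports) / False (contradicts) / None (silent)}}
    """
    labels = {}
    for finding, verdicts in evidence.items():
        votes = [v for v in verdicts.values() if v is not None]
        if not votes:
            labels[finding] = "no evidence"
        elif all(votes):
            labels[finding] = "convergent" if len(votes) > 1 else "single source"
        elif not any(votes):
            labels[finding] = "not supported"
        else:
            labels[finding] = "divergent: investigate"
    return labels


# Hypothetical findings from a survey, interviews and administrative records
evidence = {
    "Household income rose": {"survey": True, "interviews": True, "records": True},
    "School attendance rose": {"survey": True, "interviews": False, "records": None},
    "Women's decision-making improved": {"survey": None, "interviews": True, "records": None},
}
for finding, label in triangulate(evidence).items():
    print(f"{finding}: {label}")
```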

Sequential Exploratory Design

In this design, qualitative data collection and analysis precede quantitative data collection. Qualitative insights guide the development of quantitative measures, ensuring that the evaluation instruments align with the nuances uncovered in qualitative exploration.

Concurrent Embedded Design

In this design, both quantitative and qualitative data are collected simultaneously but with one method taking a more prominent role. The integration of methods occurs during data analysis, providing a comprehensive view of the intervention's impact.

Mixed-methods evaluations offer a holistic perspective, acknowledging that the true impact of interventions often transcends numerical measures and requires an understanding of the broader context.

Deciding on the Best Evaluation Methodology

The choice of methodology depends on the nature of the intervention, the available resources, and the specific questions the evaluation seeks to answer. A mixed-methods approach often proves advantageous, providing a more comprehensive understanding that transcends the limitations of individual methodologies.

Considerations such as the level of control over the intervention, the need for generalisability, and the complexity of the causal pathway should guide the selection process. The key is to strike a balance between methodological rigour and practical feasibility.

Best Practices for Conducting an Impact Evaluation

To ensure the success of an impact evaluation, adhering to best practices is essential:

1- Clear Definition of Success

Before delving into the evaluation process, it is essential to establish a clear definition of success. What are the expected outcomes of the intervention? What indicators will signify success? Defining these benchmarks provides a foundation for assessment, allowing evaluators to measure progress and determine the impact against predetermined criteria.

A shared understanding of success criteria among stakeholders, including program implementers, funders, and beneficiaries, ensures that the evaluation is aligned with the intended goals of the intervention.

2- Randomisation and Control Groups

Whenever feasible, employ randomisation and control groups to establish a credible causal link between the intervention and observed changes. Randomised controlled trials (RCTs) are considered the gold standard in impact evaluation, as the random assignment minimises biases and allows for a rigorous comparison of outcomes.

A control group provides the counterfactual: an estimate of what would have happened to participants without the intervention, against which its effects can be measured. This strengthens the internal validity of the evaluation and increases confidence in attributing observed changes to the intervention itself.
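The core logic of an RCT can be sketched in a few lines: randomly split eligible units into treatment and control groups, then estimate the average effect as the difference in mean outcomes between the two. This is a minimal sketch using simulated data; the household IDs, the seed values and the "true" effect of 5 points are all hypothetical, and a real analysis would also report a standard error or confidence interval.

```python
import random
from statistics import mean


def randomise(units, seed=42):
    """Randomly split units into equal-sized treatment and control groups."""
    rng = random.Random(seed)  # fixed seed keeps the assignment reproducible and auditable
    shuffled = list(units)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return set(shuffled[:half]), set(shuffled[half:])


def difference_in_means(outcomes, treatment):
    """Estimate the average treatment effect as mean(treated) - mean(control)."""
    treated = [y for unit, y in outcomes.items() if unit in treatment]
    control = [y for unit, y in outcomes.items() if unit not in treatment]
    return mean(treated) - mean(control)


# Simulated example: 200 hypothetical households, true effect of +5 points.
households = [f"hh{i:03d}" for i in range(200)]
treatment, control = randomise(households)
sim = random.Random(7)
outcomes = {
    hh: sim.gauss(50, 10) + (5 if hh in treatment else 0)
    for hh in households
}
estimate = difference_in_means(outcomes, treatment)
print(f"Estimated effect: {estimate:.2f} (true simulated effect: 5)")
```

Because assignment is random, the control group's mean stands in for the counterfactual, so the simple difference in means is an unbiased estimate of the effect.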

3- Longitudinal Analysis

Assessing the long-term effects of interventions requires a longitudinal analysis that extends beyond immediate outcomes. By tracking changes over time, evaluators can capture the sustainability and enduring impact of interventions. This temporal perspective contributes to a more nuanced understanding of how interventions unfold and evolve.

Longitudinal analyses are particularly valuable for interventions with delayed or cumulative effects, offering insights into the trajectories of change beyond the immediate implementation period.
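One common way to track how an effect evolves is to compare each follow-up wave with the baseline in both the treated and a comparison group, a difference-in-differences calculation repeated wave by wave. The sketch below assumes such a comparison group exists; the wave labels and mean scores are hypothetical.

```python
# Hypothetical mean outcomes (e.g. a health-knowledge score) at each survey wave.
waves = ["baseline", "6 months", "12 months", "24 months"]
treated = [52.0, 60.0, 63.0, 62.0]
comparison = [51.0, 53.0, 54.0, 55.0]


def effect_by_wave(treated, comparison):
    """Change since baseline in the treated group minus change in the comparison group."""
    t0, c0 = treated[0], comparison[0]
    return [(t - t0) - (c - c0) for t, c in zip(treated, comparison)]


for wave, effect in zip(waves, effect_by_wave(treated, comparison)):
    print(f"{wave:>9}: {effect:+.1f}")
```

With these illustrative figures, the estimated effect rises to 8 points at 12 months and then eases to 6 points at 24 months. A single endline survey would miss exactly this kind of trajectory.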

4- Triangulation of Data

Triangulation involves using multiple data sources or methods to corroborate findings, enhancing the reliability and validity of the evaluation. Combining quantitative and qualitative approaches, as well as cross-verifying information from different sources, ensures a more comprehensive understanding of the intervention's impact.

For example, if quantitative data suggests a certain outcome, qualitative data can provide insights into the contextual factors influencing that outcome. Triangulation guards against the limitations of relying on a single method or data source.

5- Ethical Considerations

Ethical considerations are integral to responsible impact evaluation. Balancing the quest for rigorous evidence with ethical principles requires careful attention to the well-being and rights of participants. This involves obtaining informed consent, protecting confidentiality, and minimising potential harm, especially when a control group is denied or delayed access to a potentially beneficial intervention.

Ethical considerations extend beyond the data collection phase to encompass the entire evaluation process. Evaluators must navigate the ethical complexities with transparency, ensuring that the benefits of the evaluation outweigh any potential risks.

6- Community Engagement

Engaging with the community throughout the evaluation process fosters a sense of collaboration and ownership. Community members can offer valuable insights into the contextual nuances that may influence the intervention's impact. Their perspectives contribute to a more holistic understanding, ensuring that the evaluation aligns with the needs and aspirations of the community.

Community engagement involves not only seeking input but also providing feedback on interim findings and involving community members in decision-making processes. This inclusive approach enhances the relevance and cultural sensitivity of the evaluation.

7- Iterative Feedback

Rather than waiting until the end of the evaluation, provide stakeholders with iterative feedback on interim findings. This approach allows for adjustments and refinements based on emerging insights. Regular check-ins with stakeholders ensure that the evaluation remains responsive to contextual changes and provides timely information for decision-making.

Iterative feedback sessions also contribute to a sense of shared ownership, where stakeholders actively contribute to the interpretation of results and the refinement of the evaluation process.

8- Adaptation Strategies

Recognising the dynamic nature of interventions and the inherent uncertainties in impact evaluation, evaluators should embrace adaptability. When confronted with unexpected challenges or shifts in the intervention context, be prepared to adjust methodologies, refine research questions, or modify data collection approaches.

Adaptation is not a sign of weakness but a strength that allows evaluators to navigate the complexities of real-world interventions and produce evaluations that are both rigorous and relevant.

By adhering to these principles, evaluators can navigate the intricate world of impact evaluation and generate evidence that informs decision-making, fosters continuous improvement, and ultimately enhances the impact of interventions on the communities they serve.

Example of Impact Evaluation in Practice

Let's explore an illustrative example of the impact evaluation process in action. Consider a community-based health intervention aiming to reduce the prevalence of a specific disease in a low-income neighbourhood. The intervention combines health education, access to medical services, and community engagement activities.

Implementation Phase:

At the outset, the program implementers collaborate with local health authorities, community leaders, and healthcare providers to design and implement the intervention. Clear objectives are established, focusing on reducing the incidence of the targeted disease, improving health knowledge, and enhancing access to healthcare services.

Evaluation Design:

Recognising the need for a comprehensive understanding of the intervention's impact, the evaluators opt for a mixed-methods approach. Quantitative data is collected through surveys measuring changes in disease prevalence, health knowledge, and healthcare utilisation. Qualitative data is gathered through interviews and focus group discussions with community members to capture their experiences and perceptions.

Key Evaluation Questions:

The KEQs crafted for this impact evaluation include:

- What is the change in the prevalence of the targeted disease post-intervention?

- How has the health knowledge of the community members evolved?

- To what extent has the intervention improved access to healthcare services?

- Are there unintended consequences or challenges arising from the intervention?

Data Collection and Analysis:

Over a predetermined time frame, data is systematically collected from baseline to post-intervention. The quantitative data is analysed using statistical methods to identify trends and associations, while qualitative data is coded and thematically analysed to extract rich insights.
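For the prevalence question, one simple statistical method is a two-proportion z-test comparing baseline and endline survey prevalence. The sketch below assumes two independent cross-sectional samples large enough for the normal approximation; the function name and case counts are hypothetical. A significant pre-post decline alone does not show that the intervention caused it. Attributing the change requires a comparison group, for example via difference-in-differences.

```python
from math import erfc, sqrt


def prevalence_change(cases_before, n_before, cases_after, n_after):
    """Two-proportion z-test for a change in prevalence between two surveys.

    Assumes independent samples and large enough counts for the normal
    approximation. Returns (difference, z statistic, two-sided p-value).
    """
    p_before = cases_before / n_before
    p_after = cases_after / n_after
    pooled = (cases_before + cases_after) / (n_before + n_after)
    se = sqrt(pooled * (1 - pooled) * (1 / n_before + 1 / n_after))
    z = (p_after - p_before) / se
    p_value = erfc(abs(z) / sqrt(2))  # two-sided p-value from the standard normal
    return p_after - p_before, z, p_value


# Hypothetical survey counts: 120 cases in 1,000 people at baseline, 85 in 1,000 at endline.
diff, z, p = prevalence_change(120, 1000, 85, 1000)
print(f"Change in prevalence: {diff:+.1%} (z = {z:.2f}, p = {p:.3f})")
```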

Findings and Iterative Feedback:

Interim findings are shared with stakeholders periodically, allowing for adjustments and refinements. For instance, if the data reveal a significant increase in disease prevalence despite the intervention, stakeholders can re-evaluate the health education component to identify potential shortcomings.

Final Assessment:

At the conclusion of the evaluation, the results are synthesised into a holistic understanding of the intervention's impact. The evaluation finds a notable decrease in disease prevalence, suggesting that the health intervention was effective. Community members also report improved health knowledge and increased use of healthcare services.

Conclusion

In conclusion, impact evaluation stands as a pillar in the edifice of evidence-based decision-making. Through a systematic and rigorous process, it unravels the intricate dynamics between interventions and their outcomes, offering valuable insights for policymakers, program implementers, and communities. From defining clear objectives and crafting relevant KEQs to selecting appropriate methodologies and engaging stakeholders, the impact evaluation process demands careful consideration at every step.

Challenges notwithstanding, the importance of impact evaluation in shaping a more informed and impactful future cannot be overstated. As we navigate the complexities of a rapidly changing world, the need for interventions that truly make a difference becomes increasingly apparent. Impact evaluation, with its ability to decipher the nuanced interactions between interventions and outcomes, emerges as a beacon guiding us toward more effective, efficient, and sustainable solutions. It is not merely an evaluative tool but a transformative process, paving the way for positive change and a better-informed tomorrow.

Frequently Asked Questions (FAQ)

What distinguishes outcomes from impacts in impact evaluation?

Outcomes are immediate changes resulting from interventions, while impacts are profound, lasting transformations. Outcomes are the stepping stones toward broader impacts, making the distinction vital for understanding an intervention's true success.

When should an impact evaluation be conducted?

Impact evaluations are most valuable when an intervention aims for significant and lasting change, is resource-intensive, or raises questions about causal relationships, or when its success will inform future decisions or policies.

Who should be engaged in the impact evaluation process?

Collaboration is key. Engage program implementers, beneficiaries, experts, and external evaluators. Their diverse perspectives enrich the evaluation: implementers understand the intricacies of implementation, beneficiaries know the real-world impact, and external evaluators bring an independent, unbiased view.

Why is an iterative feedback approach essential in impact evaluation?

Iterative feedback allows for adjustments based on emerging insights, ensuring the evaluation remains responsive to contextual changes. It fosters a sense of shared ownership among stakeholders and contributes to a more nuanced interpretation of results.

What are the main challenges in impact evaluation?

Challenges include establishing causal links, navigating contextual dynamics, resource intensity, and ethical considerations. These hurdles highlight the need for refined methodologies, community engagement, and a balance between rigour and practicality.